Right now we have following fields in Product Table:

create_table "products", force: :cascade do |t|

t.string "title", null: false

t.text "description"

t.string "category"

t.string "color"

t.string "size", limit: 10

t.decimal "mrp", precision: 7, scale: 2

t.decimal "discount", precision: 7, scale: 2

t.decimal "rating", precision: 2, scale: 1

t.datetime "created_at", null: false

t.datetime "updated_at", null: false

end

If you examine the above table, there will be repetitive product items if consider for a size of an item there comes so many colours. We need to create different product rows for each size different colours.

So Let’s split the table into two.

1. Product Table

class CreateProducts < ActiveRecord::Migration[8.0]

def change

def change

create_table :products do |t|

t.string :name

t.text :description

t.string :category # women, men, kids, infants

t.decimal :rating, precision: 2, scale: 1, default: 0.0

t.timestamps

end

add_index :products, :category

end

end

end

2. Product Variant Table

class CreateProductVariants < ActiveRecord::Migration[8.0]

def change

create_table :product_variants do |t|

t.references :product, null: false, foreign_key: true

t.string :sku, null: false

t.decimal :price, precision: 10, scale: 2

t.string :size

t.string :color

t.integer :stock_quantity, default: 0

t.jsonb :specs, default: {}, null: false

t.timestamps

end

# GIN index for fast JSONB attribute searching

add_index :product_variants, :specs, using: :gin

add_index :product_variants, [ :product_id, :size, :color ], unique: true

add_index :product_variants, :sku, unique: true

end

end

Data normalization is a core concept in database design that helps organize data efficiently, eliminate redundancy, and ensure data integrity.

🔍 What Is Data Normalization?

Normalization is the process of structuring a relational database in a way that:

- Reduces data redundancy (no repeated data)

- Prevents anomalies in insert, update, or delete operations

- Improves data integrity

It breaks down large, complex tables into smaller, related tables and defines relationships using foreign keys.

🧐 Why Normalize?

| Problem Without Normalization | How Normalization Helps |

|---|---|

| Duplicate data everywhere | Moves repeated data into separate tables |

| Inconsistent values | Enforces rules and relationships |

| Hard to update data | Isolates each concept so it’s updated once |

| Wasted storage | Reduces data repetition |

📚 Normal Forms (NF)

Each Normal Form (NF) represents a level of database normalization. The most common are:

🔸 1NF – First Normal Form

- Eliminate repeating groups

- Ensure each column has atomic (indivisible) values

Bad Example:

CREATE TABLE Orders (

order_id INT,

customer_name VARCHAR,

items TEXT -- "Shirt, Pants, Hat"

);

Fixed (1NF):

CREATE TABLE OrderItems (

order_id INT,

item_name VARCHAR

);

🔸 2NF – Second Normal Form

- Must be in 1NF

- Remove partial dependencies (when a non-key column depends only on part of a composite key)

Example: If a table has a composite key (student_id, course_id), and student_name depends only on student_id, it should go into a separate table.

🔸 3NF – Third Normal Form

- Must be in 2NF

- Remove transitive dependencies (non-key columns depending on other non-key columns)

Example:

CREATE TABLE Employees (

emp_id INT PRIMARY KEY,

emp_name VARCHAR,

dept_name VARCHAR,

dept_location VARCHAR

);

Here, dept_location depends on dept_name, not emp_id — so split it:

Normalized:

CREATE TABLE Departments (

dept_name VARCHAR PRIMARY KEY,

dept_location VARCHAR

);

💡 Real-World Example

Let’s say you have this table:

Orders(order_id, customer_name, customer_email, product1, product2, product3)

Problems:

- Repeating columns (

product1,product2, …) - Redundant customer data in each order

Normalized Version:

Customers(customer_id, name, email)Orders(order_id, customer_id)OrderItems(order_id, product_id)Products(product_id, name, price)

⚖️ Normalization vs. Denormalization

- ✅ Normalization = Good for consistency, long-term maintenance

- ⚠️ Denormalization = Good for performance in read-heavy systems (like reporting dashboards)

Use normalization as a default practice, then selectively denormalize if performance requires it.

Delete button example (Rails 7+)

<%= link_to "Delete Product",

@product,

data: { turbo_method: :delete, turbo_confirm: "Are you sure you want to delete this product?" },

class: "inline-block px-4 py-2 bg-red-100 text-red-600 border border-red-300 rounded-md hover:bg-red-600 hover:text-white font-semibold transition duration-300 transform hover:scale-105" %>

💡 What’s Improved:

data: { turbo_confirm: ... }ensures compatibility with Turbo (Rails 7+).- Better button-like appearance (

bg,px,py,rounded, etc.). - Hover effects and transitions for a smooth UI experience.

Add Brand to products table

Let’s add brand column to the product table:

✗ rails g migration add_brand_to_products brand:string:

index

class AddBrandToProducts < ActiveRecord::Migration[8.0]

def change

# Add 'brand' column

add_column :products, :brand, :string

# Add index for brand

add_index :products, :brand

end

end

❗️Important Note:

❌ PostgreSQL does not support BEFORE or AFTER when adding a column.

Caused by:

PG::SyntaxError: ERROR: syntax error at or near "BEFORE" (PG::SyntaxError)

LINE 1: ...LTER TABLE products ADD COLUMN brand VARCHAR(255) BEFORE des...

- PostgreSQL (default in Rails) does not support column order (they’re always returned in the order they were created).

- If you’re using MySQL, you could use raw SQL for positioning as shown below.

If I USE MySQL, I would like to see the brand name as first column of the table products. You can do that by changing the migration to:

class AddBrandToProducts < ActiveRecord::Migration[8.0]

def up

execute "ALTER TABLE products ADD COLUMN brand VARCHAR(255) BEFORE description;"

add_index :products, :brand

end

def down

remove_index :products, :brand

remove_column :products, :brand

end

end

Reverting Previous Migrations

You can use Active Record’s ability to rollback migrations using the revert method:

require_relative "20121212123456_example_migration"

class FixupExampleMigration < ActiveRecord::Migration[8.0]

def change

revert ExampleMigration

create_table(:apples) do |t|

t.string :variety

end

end

end

The revert method also accepts a block of instructions to reverse. This could be useful to revert selected parts of previous migrations.

Reference: https://guides.rubyonrails.org/active_record_migrations.html#reverting-previous-migrations

Product Index Page after applying NF:

Product Show Page after applying NF:

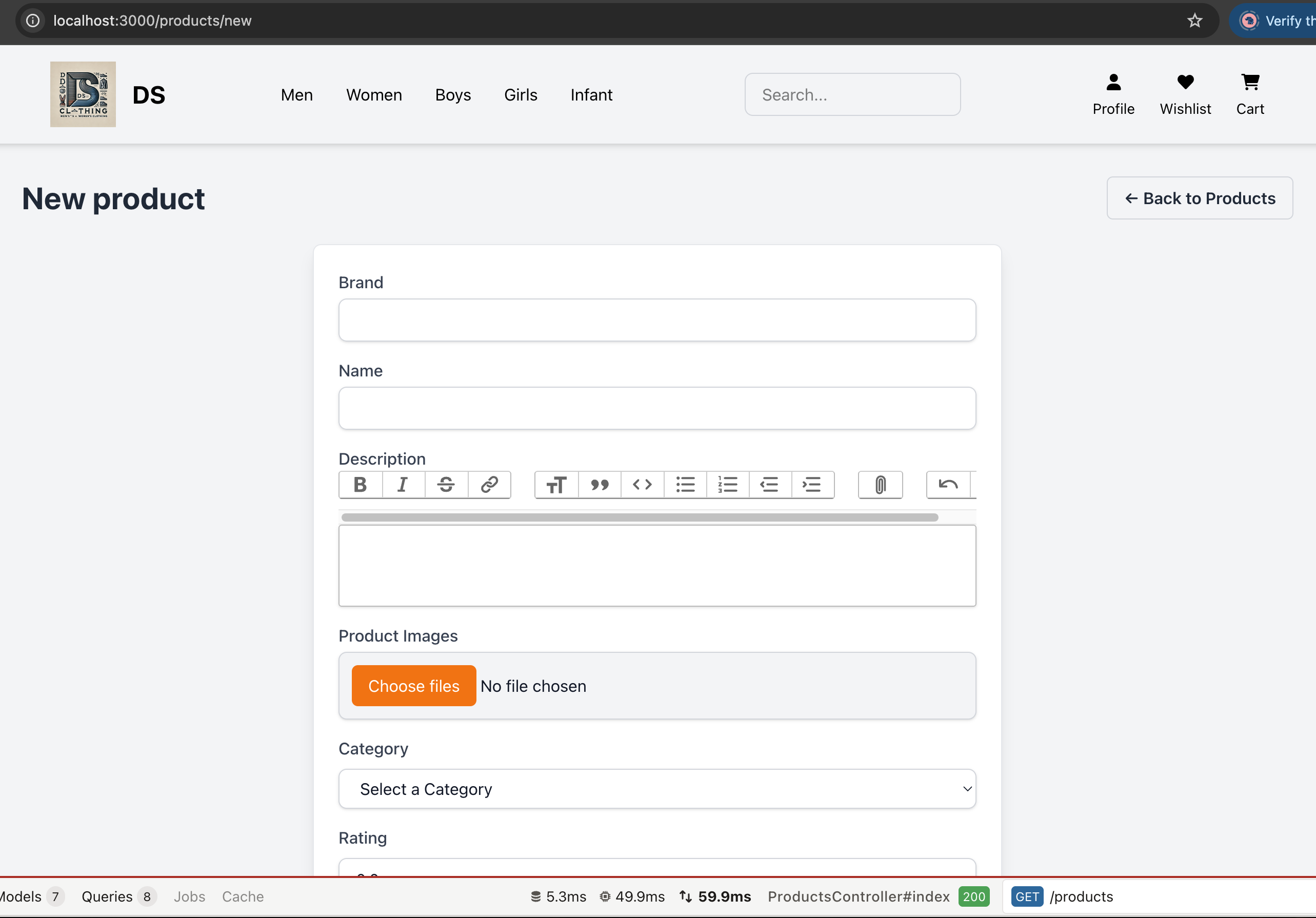

New Product Page after applying NF:

to be continued.. 🚀