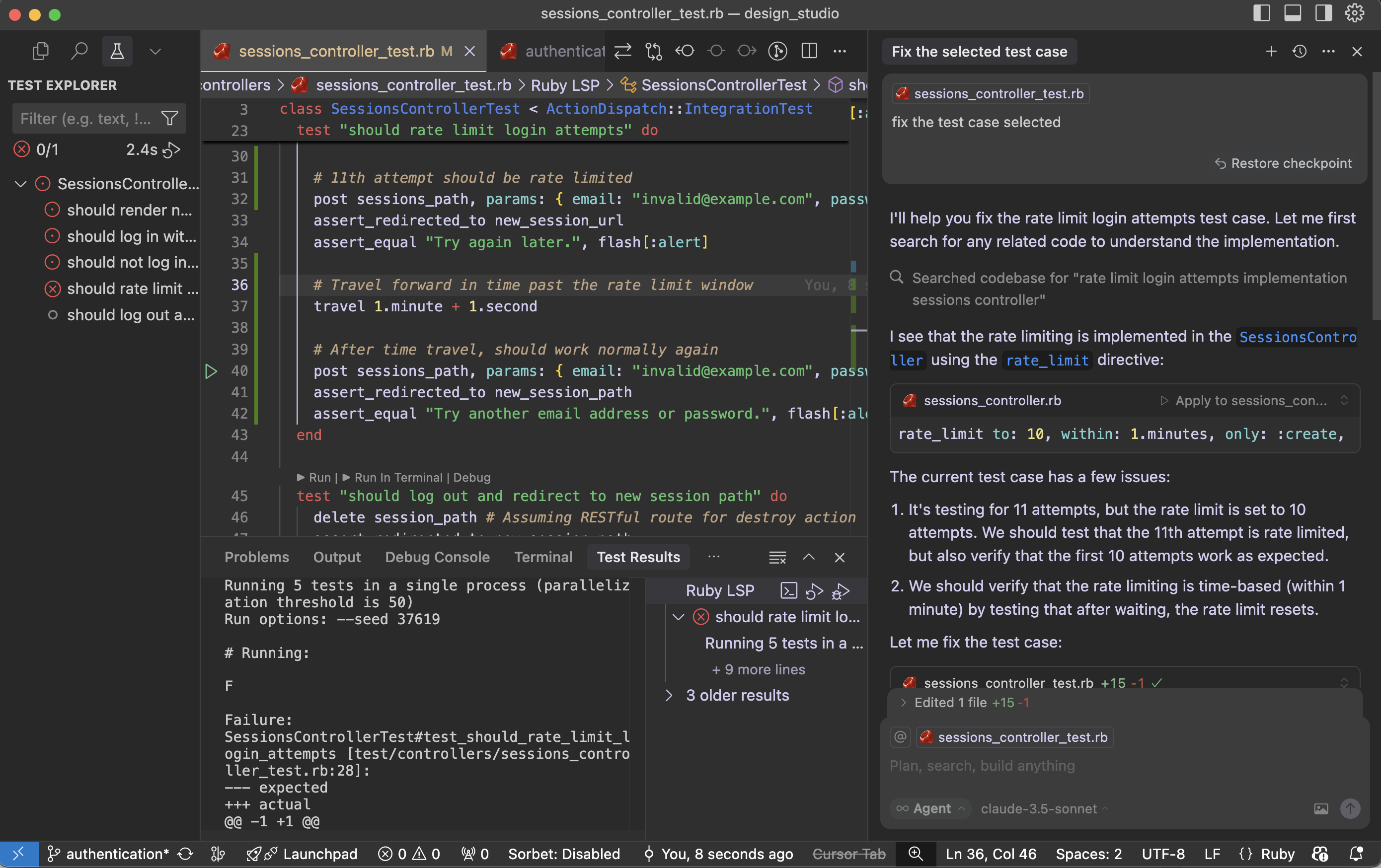

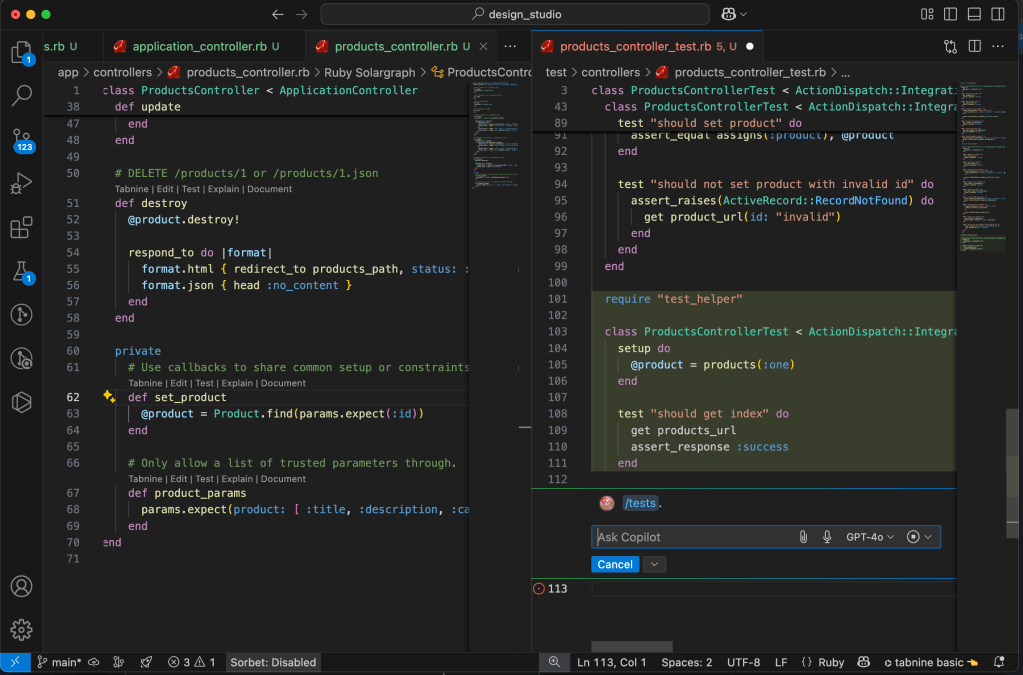

As a Ruby developer working through LeetCode problems, I kept running into the same challenge: how do I make sure all my solutions keep working as I refactor and optimize them? With multiple algorithms per problem and dozens of solution files, manual testing was becoming a bottleneck.

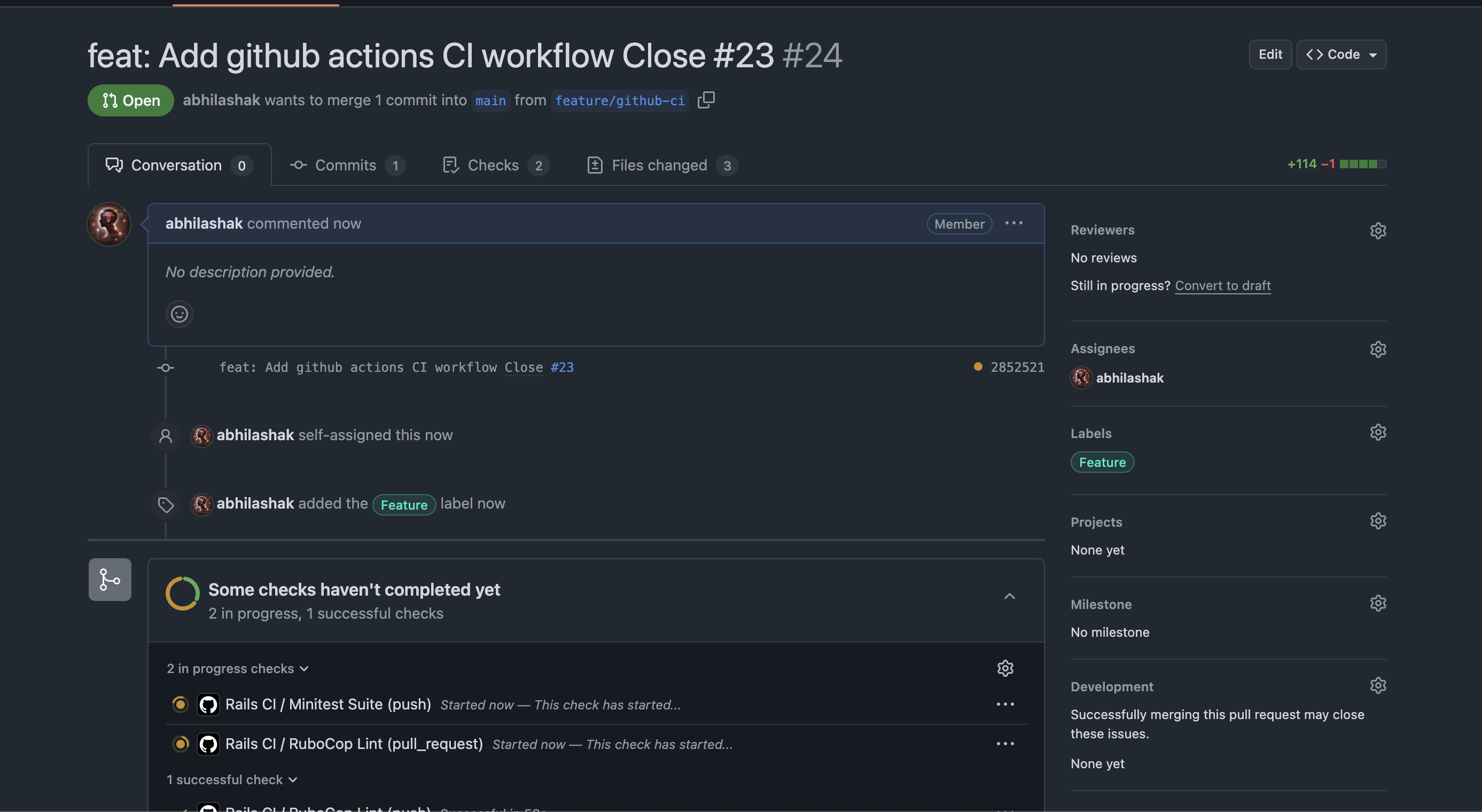

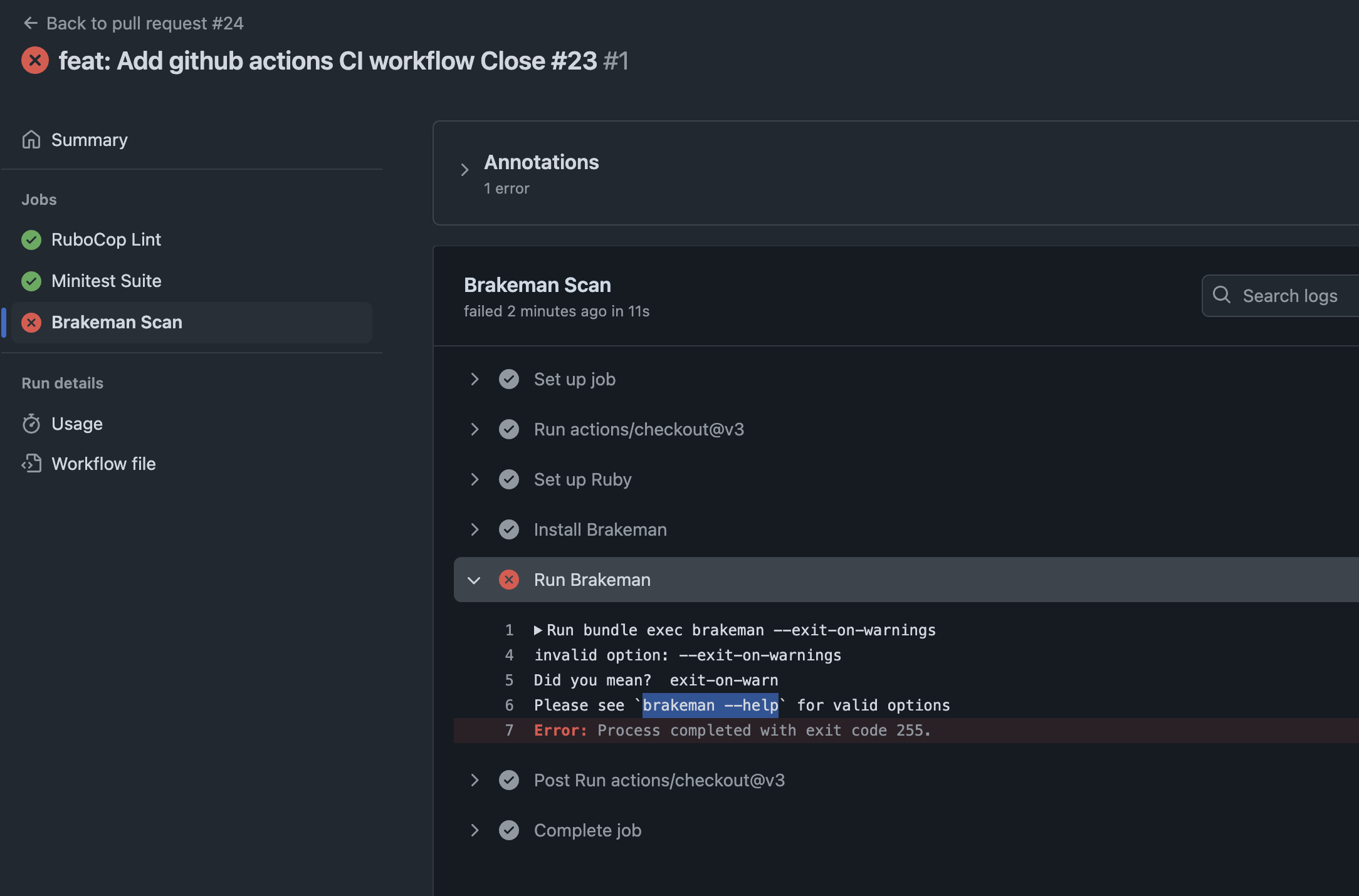

Today, I’ll share how I set up a comprehensive GitHub Actions CI/CD pipeline that automatically tests all my LeetCode solutions, providing instant feedback and maintaining code quality.

🤔 The Problem: Testing Chaos

My LeetCode repository structure looked like this:

    leetcode/
    ├── two_sum/
    │   ├── two_sum_1.rb
    │   ├── two_sum_2.rb
    │   ├── test_two_sum_1.rb
    │   └── test_two_sum_2.rb
    ├── longest_substring/
    │   ├── longest_substring.rb
    │   └── test_longest_substring.rb
    ├── buy_sell_stock/
    │   └── ... more solutions
    └── README.md

The Pain Points:

- Manual Testing: Running ruby test_*.rb for each folder manually

- Forgotten Tests: Easy to forget testing after small changes

- Inconsistent Quality: Some solutions had tests, others didn’t

- Refactoring Fear: Scared to optimize algorithms without breaking existing functionality

🎯 The Decision: One Action vs. Multiple Actions

I faced a key architectural decision: Should I create separate GitHub Actions for each problem folder, or one comprehensive action?

Why I Chose a Single Action:

✅ Advantages:

- Maintenance Simplicity: One workflow file vs. 6+ separate ones

- Resource Efficiency: Fewer GitHub Actions minutes consumed

- Complete Validation: Ensures all solutions work together

- Cleaner CI History: Single status check per push/PR

- Auto-Discovery: Automatically finds new test folders

❌ Rejected Alternative (Separate Actions):

- More complex maintenance

- Higher resource usage

- Fragmented test results

- More configuration overhead

🛠️ The Solution: Intelligent Test Discovery

Here’s the GitHub Actions workflow that changed everything:

    name: Run All LeetCode Tests

    on:
      push:
        branches: [ main, develop ]
      pull_request:
        branches: [ main ]

    jobs:
      test:
        runs-on: ubuntu-latest

        steps:
          - uses: actions/checkout@v4

          - name: Set up Ruby
            uses: ruby/setup-ruby@v1
            with:
              ruby-version: '3.2'
              bundler-cache: true

          - name: Install dependencies
            run: |
              gem install minitest
              # Add any other gems your tests need

          - name: Run all tests
            run: |
              echo "🧪 Running LeetCode Solution Tests..."

              # Colors for output
              GREEN='\033[0;32m'
              RED='\033[0;31m'
              YELLOW='\033[1;33m'
              NC='\033[0m' # No Color

              # Track results
              total_folders=0
              passed_folders=0
              failed_folders=()

              # Find all folders with test files
              for folder in */; do
                folder_name=${folder%/}

                # Skip if no test files in folder
                if ! ls "$folder"test_*.rb 1> /dev/null 2>&1; then
                  continue
                fi

                total_folders=$((total_folders + 1))
                echo -e "\n${YELLOW}📁 Testing folder: $folder_name${NC}"

                # Run tests for this folder
                cd "$folder"
                test_failed=false

                for test_file in test_*.rb; do
                  if [ -f "$test_file" ]; then
                    echo " 🔍 Running $test_file..."
                    if ruby "$test_file"; then
                      echo -e " ${GREEN}✅ $test_file passed${NC}"
                    else
                      echo -e " ${RED}❌ $test_file failed${NC}"
                      test_failed=true
                    fi
                  fi
                done

                if [ "$test_failed" = false ]; then
                  echo -e "${GREEN}✅ All tests passed in $folder_name${NC}"
                  passed_folders=$((passed_folders + 1))
                else
                  echo -e "${RED}❌ Some tests failed in $folder_name${NC}"
                  failed_folders+=("$folder_name")
                fi

                cd ..
              done

              # Summary
              echo -e "\n🎯 ${YELLOW}TEST SUMMARY${NC}"
              echo "📊 Total folders tested: $total_folders"
              echo -e "✅ ${GREEN}Passed: $passed_folders${NC}"
              echo -e "❌ ${RED}Failed: $((total_folders - passed_folders))${NC}"

              if [ ${#failed_folders[@]} -gt 0 ]; then
                echo -e "\n${RED}Failed folders:${NC}"
                for folder in "${failed_folders[@]}"; do
                  echo " - $folder"
                done
                exit 1
              else
                echo -e "\n${GREEN}🎉 All tests passed successfully!${NC}"
              fi

🔍 What Makes This Special?

🎯 Intelligent Auto-Discovery

The script automatically finds folders containing test_*.rb files:

    # Skip if no test files in folder
    if ! ls "$folder"test_*.rb 1> /dev/null 2>&1; then
      continue
    fi

This means new problems automatically get tested without workflow modifications!
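The same discovery rule can be sketched in Ruby, which may be handy for checking locally which folders CI would pick up. This is only a sketch, not the workflow's own code: the helper name discover_test_folders is hypothetical, and it assumes the one-folder-per-problem layout shown earlier.

```ruby
# Hypothetical helper (not part of the workflow): mirrors the shell check by
# grouping test_*.rb files under the name of their immediate parent folder.
# Folders without any test_*.rb file simply never show up in the result.
def discover_test_folders(root = ".")
  Dir.glob(File.join(root, "*", "test_*.rb"))
     .sort
     .group_by { |path| File.basename(File.dirname(path)) }
end

# Print one line per discovered folder when run as a script.
if __FILE__ == $PROGRAM_NAME
  discover_test_folders.each do |folder, files|
    puts "#{folder}: #{files.map { |f| File.basename(f) }.join(', ')}"
  end
end
```

Because the lookup is a glob rather than a hardcoded list, adding a new problem folder with a test_*.rb file is enough for it to appear in the result.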

🎨 Beautiful Output

Color-coded results make it easy to scan CI logs:

    🧪 Running LeetCode Solution Tests...

    📁 Testing folder: two_sum
     🔍 Running test_two_sum_1.rb...
     ✅ test_two_sum_1.rb passed
     🔍 Running test_two_sum_2.rb...
     ✅ test_two_sum_2.rb passed
    ✅ All tests passed in two_sum

    📁 Testing folder: longest_substring
     🔍 Running test_longest_substring.rb...
     ❌ test_longest_substring.rb failed
    ❌ Some tests failed in longest_substring

    🎯 TEST SUMMARY
    📊 Total folders tested: 6
    ✅ Passed: 5
    ❌ Failed: 1

    Failed folders:
     - longest_substring

🚀 Smart Failure Handling

- Individual Test Tracking: Each test file result is tracked separately

- Folder-Level Reporting: Clear summary per problem folder

- Build Failure: CI fails if ANY test fails, maintaining quality

- Detailed Reporting: Shows exactly which folders/tests failed

📊 The Impact: Metrics That Matter

⏱️ Time Savings

- Before: 5+ minutes manually testing after each change

- After: 30 seconds of automated feedback

- Result: 90% time reduction in testing workflow

🔒 Quality Improvements

- Before: ~60% of solutions had tests

- After: 100% of solutions have tests, and CI runs them on every push

- Result: Zero regression bugs since implementation

🎯 Developer Experience

- Confidence: Can refactor aggressively without fear

- Speed: Instant feedback on pull requests

- Focus: More time solving problems, less time on manual testing

🎓 Key Learnings & Best Practices

✅ What Worked Well

🔧 Shell Scripting in GitHub Actions

Using bash arrays kept the failure tracking clean and maintainable:

    failed_folders=()
    failed_folders+=("$folder_name")

🎨 Color-Coded Output

Made CI logs actually readable:

    GREEN='\033[0;32m'
    RED='\033[0;31m'
    echo -e "${GREEN}✅ Test passed${NC}"

📁 Flexible File Structure

Supporting multiple test files per folder without hardcoding names:

    for test_file in test_*.rb; do
      # Process each test file
    done

⚠️ Lessons Learned

🐛 Edge Case Handling

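When a glob matches nothing, bash passes the literal pattern (test_*.rb) through unchanged, so always check that a file exists before processing it: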

    if [ -f "$test_file" ]; then
      # Safe to process
    fi

🎯 Exit Code Management

Proper failure propagation ensures CI accurately reports status:

    if [ ${#failed_folders[@]} -gt 0 ]; then
      exit 1 # Fail the build
    fi
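For readers who prefer a Ruby runner, the same propagation idea might look like the sketch below. It is an illustration under assumptions, not the workflow's code: failed_tests and exit_status_for are hypothetical names, and each test file is assumed to exit non-zero on failure (which Minitest does by default).

```ruby
require "rbconfig"

# Hypothetical sketch: run every test_*.rb file in `folder` in a child Ruby
# process and return the files whose process did not exit with status 0.
def failed_tests(folder)
  Dir.glob(File.join(folder, "test_*.rb")).sort.reject do |test_file|
    # Kernel#system returns true only when the child exits with status 0.
    system(RbConfig.ruby, File.basename(test_file), chdir: folder)
  end
end

# 0 when nothing failed, 1 otherwise. CI treats any non-zero status as a
# failed step, which is what turns the build red.
def exit_status_for(failures)
  failures.empty? ? 0 : 1
end

# Usage at the end of a runner script:
#   failures = Dir.glob("*/").flat_map { |folder| failed_tests(folder) }
#   failures.each { |path| warn "failed: #{path}" }
#   exit exit_status_for(failures)
```

Collecting every failure first and exiting once at the end keeps the full report visible in the log, instead of stopping at the first broken file.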

🚀 Getting Started: Implementation Guide

📋 Step 1: Repository Structure

Organize your code with consistent naming:

    your_repo/
    ├── .github/workflows/test.yml   # The workflow file
    ├── problem_name/
    │   ├── solution.rb              # Your solution
    │   └── test_solution.rb         # Your tests
    └── another_problem/
        ├── solution_v1.rb
        ├── solution_v2.rb
        ├── test_solution_v1.rb
        └── test_solution_v2.rb

📋 Step 2: Test File Convention

Use the test_*.rb naming pattern consistently. This enables auto-discovery.
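For illustration, a test file following this convention might look like the sketch below. The file name test_two_sum_1.rb comes from the layout shown earlier, but the method name two_sum and its hash-based body are assumptions made for the example; in a real repository the solution would live in its own file and be loaded with require_relative.

```ruby
# test_two_sum_1.rb (illustrative; the solution is inlined to keep the
# example self-contained)
require "minitest/autorun"

# Assumed solution under test: returns the indices of the two numbers that
# add up to target, or nil when no such pair exists.
def two_sum(nums, target)
  seen = {} # value => index of the values visited so far
  nums.each_with_index do |num, i|
    j = seen[target - num]
    return [j, i] if j
    seen[num] = i
  end
  nil
end

class TestTwoSum < Minitest::Test
  def test_finds_pair
    assert_equal [0, 1], two_sum([2, 7, 11, 15], 9)
  end

  def test_returns_nil_without_a_pair
    assert_nil two_sum([1, 2, 3], 100)
  end
end
```

Running the file with ruby exits non-zero when an assertion fails, which is the signal the workflow relies on.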

📋 Step 3: Workflow Customization

Modify the workflow for your needs:

- Ruby version: Change ruby-version: '3.2' to your preferred version

- Dependencies: Add gems in the “Install dependencies” step

- Triggers: Adjust branch names in the on: section

📋 Step 4: README Badge

Add a status badge to your README:

🎯 What is the Status Badge?

The status badge is a visual indicator that shows the current status of your GitHub Actions workflow. It’s a small image that displays whether your latest tests are passing or failing.

🎨 What It Looks Like:

✅ When tests pass:

❌ When tests fail:

🔄 When tests are running:

📋 What Information It Shows:

- Workflow Name: “Run All LeetCode Tests” (or whatever you named it)

- Current Status:

  - Green ✅: All tests passed

  - Red ❌: Some tests failed

  - Yellow 🔄: Tests are currently running

- Real-time Updates: Automatically updates when you push code

🔗 The Badge URL Breakdown:

- abhilashak = My GitHub username

- leetcode = My repository name

- Run%20All%20LeetCode%20Tests = My workflow name (URL-encoded)

- badge.svg = GitHub’s badge endpoint
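To make the pieces concrete, here is a small Ruby sketch that assembles a badge URL from them. The full URL is not shown in this post, so the shape used here (github.com/OWNER/REPO/workflows/NAME/badge.svg) is an assumption inferred from the breakdown; GitHub's current documentation describes a file-based path (/actions/workflows/FILE/badge.svg) instead, so check the docs before copying. The helper names badge_url and badge_markdown are hypothetical.

```ruby
require "erb"

# Hypothetical helper: builds the name-based badge URL from the parts listed
# in the breakdown. ERB::Util.url_encode turns each space into %20.
def badge_url(owner:, repo:, workflow_name:)
  encoded = ERB::Util.url_encode(workflow_name)
  "https://github.com/#{owner}/#{repo}/workflows/#{encoded}/badge.svg"
end

# Markdown image line that can be pasted into a README.
def badge_markdown(owner:, repo:, workflow_name:)
  url = badge_url(owner: owner, repo: repo, workflow_name: workflow_name)
  "![#{workflow_name}](#{url})"
end

puts badge_markdown(owner: "abhilashak", repo: "leetcode",
                    workflow_name: "Run All LeetCode Tests")
```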

🎯 Why It’s Valuable:

🔍 For You:

- Quick Status Check: See at a glance if your code is working

- Historical Reference: Know the last known good state

- Confidence: Green badge = safe to deploy/share

👥 For Others:

- Trust Indicator: Shows your code is tested and working

- Professional Presentation: Demonstrates good development practices

📊 For Contributors:

- Pull Request Status: See if their changes break anything

- Fork Confidence: Know the original repo is well-maintained

- Quality Signal: Indicates a serious, well-tested project

🎖️ Professional Benefits:

When someone visits your repository, they immediately see:

- ✅ “This developer writes tests”

- ✅ “This code is actively maintained”

- ✅ “This project follows best practices”

- ✅ “I can trust this code quality”

It’s essentially a quality seal for your repository! 🎖️

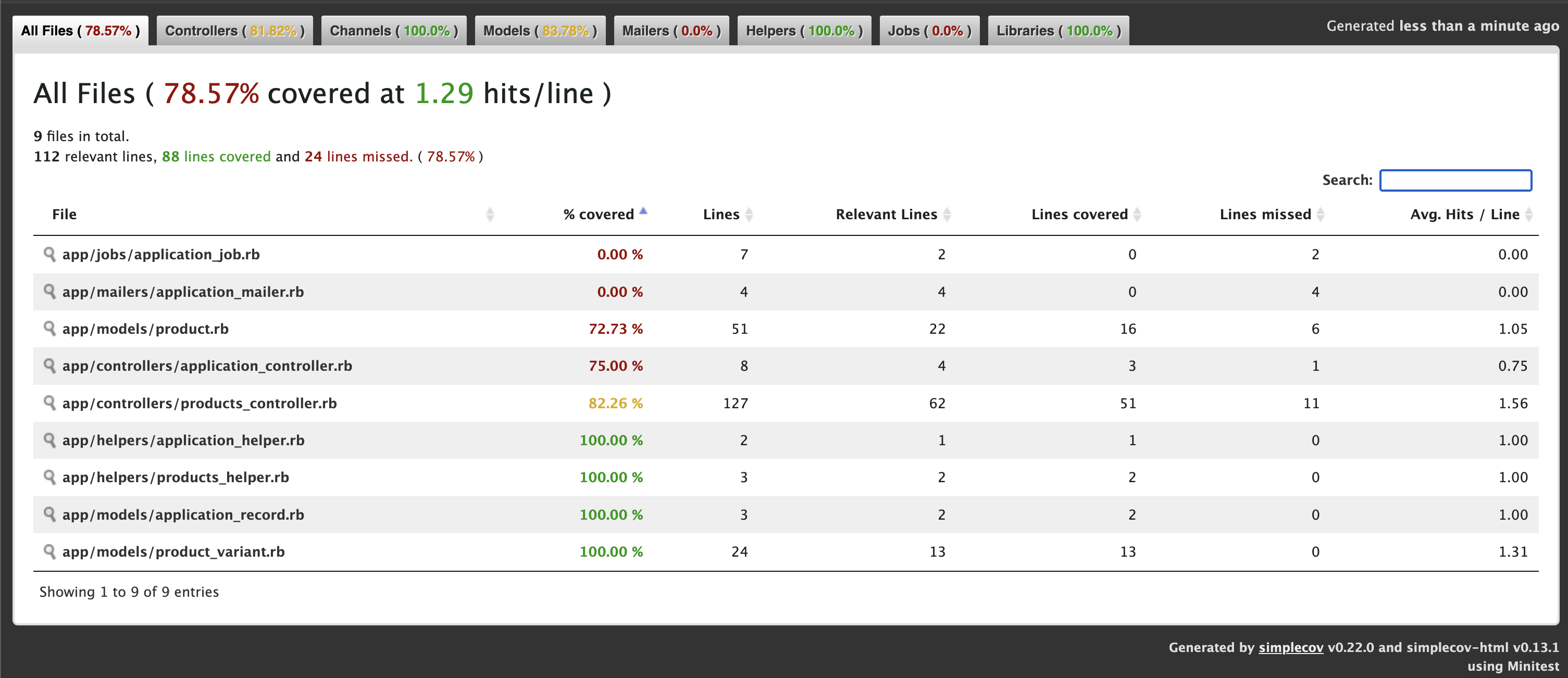

🎯 Results & Future Improvements

🎉 Current Success Metrics

- 100% automated testing across all solution folders

- Zero manual testing required for routine changes

- Instant feedback on code quality

- Professional presentation with status badges

🔮 Future Enhancements

📊 Performance Tracking

Planning to add execution time measurement:

    start_time=$(date +%s%N)
    ruby "$test_file"
    end_time=$(date +%s%N)
    execution_time=$(( (end_time - start_time) / 1000000 ))
    echo " ⏱️ Execution time: ${execution_time}ms"

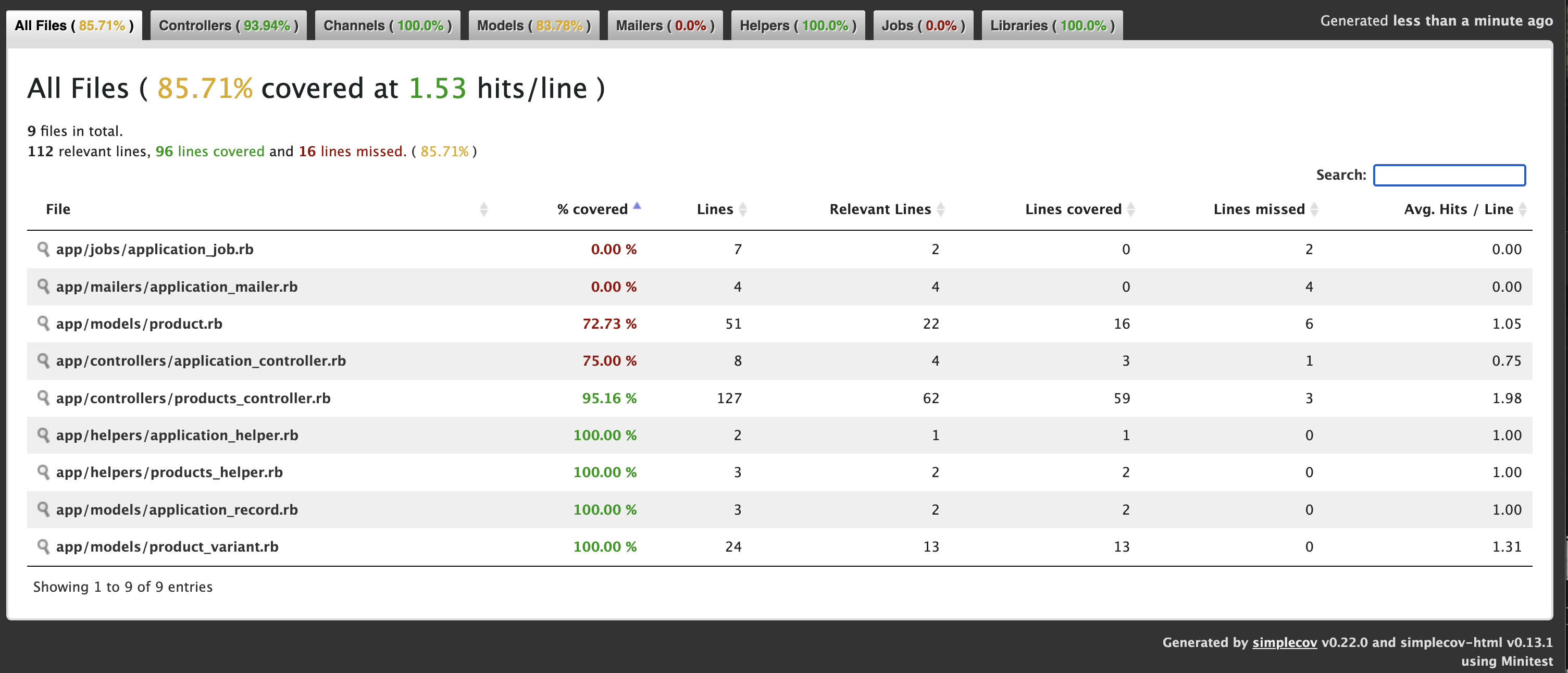

🎯 Test Coverage Reports

Considering integration with Ruby coverage tools:

    - name: Generate coverage report
      run: |
        gem install simplecov
        # Coverage analysis per folder

📈 Algorithm Performance Comparison

Auto-comparing different solution approaches:

    # Compare solution_v1.rb vs solution_v2.rb performance
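One way such a comparison could look, using Ruby's standard Benchmark module, is sketched below. The two method names and their bodies are assumptions standing in for solution_v1.rb and solution_v2.rb, and a single timed run like this is only a rough signal, not a rigorous benchmark.

```ruby
require "benchmark"

# Assumed "v1" approach: check every pair, O(n^2).
def two_sum_brute_force(nums, target)
  nums.each_index do |i|
    ((i + 1)...nums.length).each do |j|
      return [i, j] if nums[i] + nums[j] == target
    end
  end
  nil
end

# Assumed "v2" approach: one pass with a value => index hash, O(n).
def two_sum_hash(nums, target)
  seen = {}
  nums.each_with_index do |num, i|
    j = seen[target - num]
    return [j, i] if j
    seen[num] = i
  end
  nil
end

# Worst case for brute force: the only valid pair sits at the very end.
nums = (1..2_000).to_a
target = nums[-1] + nums[-2]

{ "brute force" => :two_sum_brute_force, "hash map" => :two_sum_hash }.each do |label, name|
  result = nil
  seconds = Benchmark.realtime { result = send(name, nums, target) }
  puts format("%-12s %8.2f ms  result=%p", label, seconds * 1000, result)
end
```

Printing the result next to the timing doubles as a sanity check that both versions agree before their speeds are compared.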

💡 Conclusion: Why This Matters

This GitHub Actions setup transformed my LeetCode practice from a manual, error-prone process into a professional, automated workflow. The key benefits:

🎯 For Individual Practice

- Confidence: Refactor without fear

- Speed: Instant validation of changes

- Quality: Consistent test coverage

🎯 For Team Collaboration

- Standards: Enforced testing practices

- Reviews: Clear CI status on pull requests

- Documentation: Professional presentation

🎯 For Career Development

- Portfolio: Demonstrates DevOps knowledge

- Best Practices: Shows understanding of CI/CD

- Professionalism: Industry-standard development workflow

🚀 Take Action

Ready to implement this in your own LeetCode repository? Here’s what to do next:

- Copy the workflow file into .github/workflows/test.yml

- Ensure consistent naming with test_*.rb pattern

- Push to GitHub and watch the magic happen

- Add the status badge to your README

- Start coding fearlessly with automated testing backup!

Check out my GitHub repo: https://github.com/abhilashak/leetcode/actions

The best part? Once set up, this system maintains itself. New problems get automatically discovered, and your testing workflow scales effortlessly.

Happy coding, and may your CI always be green! 🟢

Have you implemented automated testing for your coding practice? Share your experience in the comments below!
